Implementing Backpropagation in Python Code (YouTube)

GitHub kenkurniawanen/mlpfromscratch Python implementation of MLP backpropagation using

Deep Neural Net with Forward and Back Propagation from Scratch - Python

This article aims to implement a deep neural network from scratch. We will implement a deep neural network containing a hidden layer with four units and one output layer.
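Below is a minimal NumPy sketch of such a network. It assumes sigmoid activations, a mean-squared-error loss, full-batch gradient descent, and the XOR truth table as toy data, none of which the snippet specifies. The function names `init_params`, `forward`, and `backward` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def init_params(n_in, n_hidden=4, n_out=1, seed=0):
    # Small random weights and zero biases (an illustrative choice)
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.5, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.5, size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(params, X):
    a1 = sigmoid(X @ params["W1"] + params["b1"])   # hidden layer (4 units)
    a2 = sigmoid(a1 @ params["W2"] + params["b2"])  # output layer
    return a1, a2

def backward(params, X, y, a1, a2):
    n = X.shape[0]
    # Loss is the batch mean squared error, so dL/da2 = 2 (a2 - y) / n
    d_z2 = 2.0 * (a2 - y) / n * a2 * (1.0 - a2)       # sigmoid'(z) = a (1 - a)
    d_z1 = (d_z2 @ params["W2"].T) * a1 * (1.0 - a1)  # chain rule back to the hidden layer
    return {
        "W2": a1.T @ d_z2, "b2": d_z2.sum(axis=0),
        "W1": X.T @ d_z1, "b1": d_z1.sum(axis=0),
    }

def mse(a2, y):
    return float(np.mean((a2 - y) ** 2))

# Toy XOR data (illustrative only)
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

params = init_params(n_in=2)
learning_rate = 0.5
loss_before = mse(forward(params, X)[1], y)
for _ in range(1000):
    a1, a2 = forward(params, X)
    grads = backward(params, X, y, a1, a2)
    for key in params:
        params[key] -= learning_rate * grads[key]  # gradient descent step
loss_after = mse(forward(params, X)[1], y)
print(f"loss: {loss_before:.4f} -> {loss_after:.4f}")
```

Keeping each layer's activations from the forward pass is what allows the backward pass to reuse them when applying the chain rule.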

Implementing Backpropagation From Scratch on Python 3+ by Essam Wisam Towards Data Science

We'll work through the detailed mathematical calculations of the backpropagation algorithm. We'll also discuss how to implement a backpropagation neural network in Python from scratch using NumPy, based on this GitHub project. The project builds a generic backpropagation neural network that can work with any architecture. Let's get started.
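To illustrate what "works with any architecture" can mean, here is a sketch (not the linked project's code) of a hypothetical `Network` class that takes a list of layer sizes. It assumes sigmoid units in every layer and a mean-squared-error loss.

```python
import numpy as np

class Network:
    """Fully connected network with sigmoid units; sizes such as [3, 5, 4, 2]."""

    def __init__(self, sizes, seed=0):
        rng = np.random.default_rng(seed)
        # One weight matrix and one bias vector per pair of consecutive layers
        self.weights = [rng.normal(0.0, 1.0 / np.sqrt(m), size=(m, n))
                        for m, n in zip(sizes[:-1], sizes[1:])]
        self.biases = [np.zeros(n) for n in sizes[1:]]

    @staticmethod
    def sigmoid(z):
        return 1.0 / (1.0 + np.exp(-z))

    def forward(self, X):
        activations = [X]  # keep every layer's output for the backward pass
        for W, b in zip(self.weights, self.biases):
            activations.append(self.sigmoid(activations[-1] @ W + b))
        return activations

    def backward(self, activations, y):
        out = activations[-1]
        # delta = dL/dz for the output layer, with L = mean squared error
        delta = 2.0 * (out - y) / y.shape[0] * out * (1.0 - out)
        grads_W = [None] * len(self.weights)
        grads_b = [None] * len(self.biases)
        for i in reversed(range(len(self.weights))):
            grads_W[i] = activations[i].T @ delta
            grads_b[i] = delta.sum(axis=0)
            if i > 0:  # propagate delta to the previous layer
                a = activations[i]
                delta = (delta @ self.weights[i].T) * a * (1.0 - a)
        return grads_W, grads_b

    def train_step(self, X, y, lr):
        activations = self.forward(X)
        grads_W, grads_b = self.backward(activations, y)
        for i in range(len(self.weights)):
            self.weights[i] -= lr * grads_W[i]
            self.biases[i] -= lr * grads_b[i]
        return float(np.mean((activations[-1] - y) ** 2))  # loss before this update

# Usage on random toy data (illustrative only)
rng = np.random.default_rng(1)
X = rng.normal(size=(8, 3))
y = rng.uniform(size=(8, 2))
net = Network([3, 5, 4, 2])
losses = [net.train_step(X, y, lr=0.5) for _ in range(200)]
```

Because weights and activations live in lists, the same backward loop handles any number of layers.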

Making Backpropagation, Autograd, MNIST Classifier from scratch in Python by Andrey Nikishaev

Building a Neural Network from Scratch (with Backpropagation): Unveiling the magic of neural networks, from bare Python to TensorFlow. A hands-on journey to understand and build from scratch

GitHub

Sep 23, 2021. In the last story, we derived all the necessary backpropagation equations from the ground up. We also introduced the notation used and got a grasp on how the algorithm works. In this story, we'll focus on implementing the algorithm in Python. Let's start by providing some structure for our neural network.
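One common way to provide that structure, sketched here as an assumption rather than the article's own design, is to give every layer its own `forward` and `backward` method and chain the layers in a container. The class names `Dense`, `Sigmoid`, and `Sequential` are illustrative, and the demo assumes an MSE loss and XOR toy data.

```python
import numpy as np

class Dense:
    """Fully connected layer: y = x @ W + b."""
    def __init__(self, n_in, n_out, rng):
        self.W = rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out))
        self.b = np.zeros(n_out)

    def forward(self, x):
        self.x = x  # cache the input for the backward pass
        return x @ self.W + self.b

    def backward(self, grad_out, lr):
        grad_in = grad_out @ self.W.T  # computed with W *before* it is updated
        self.W -= lr * (self.x.T @ grad_out)
        self.b -= lr * grad_out.sum(axis=0)
        return grad_in

class Sigmoid:
    """Element-wise sigmoid; it has no parameters to update."""
    def forward(self, x):
        self.out = 1.0 / (1.0 + np.exp(-x))
        return self.out

    def backward(self, grad_out, lr):
        return grad_out * self.out * (1.0 - self.out)

class Sequential:
    def __init__(self, layers):
        self.layers = layers

    def forward(self, x):
        for layer in self.layers:
            x = layer.forward(x)
        return x

    def backward(self, grad, lr):
        for layer in reversed(self.layers):  # walk the layers in reverse order
            grad = layer.backward(grad, lr)
        return grad

rng = np.random.default_rng(0)
model = Sequential([Dense(2, 4, rng), Sigmoid(), Dense(4, 1, rng), Sigmoid()])
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)
losses = []
for _ in range(500):
    out = model.forward(X)
    losses.append(float(np.mean((out - y) ** 2)))
    grad_x = model.backward(2.0 * (out - y) / y.size, lr=0.5)  # dL/d(out) for MSE
```

Updating each `Dense` layer inside `backward` is a design shortcut; it stays equivalent to a full gradient step because the gradient passed to the previous layer is computed before the weights change.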


Backpropagation: the "learning" of our network. Since we start with a random set of weights, we need to adjust them so that the network's outputs match the corresponding target outputs in our data set. This is done through a method called backpropagation. Backpropagation works by using a loss function to calculate how far the network's output was from the target output.
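As a concrete example of such a loss function, assuming mean squared error (the snippet does not name one), the sketch below computes the loss and its gradient with respect to the predictions. That gradient is the quantity backpropagation starts from. `mse_loss` and `mse_grad` are hypothetical names.

```python
import numpy as np

def mse_loss(y_pred, y_true):
    """Mean squared error: how far the predictions are from the targets."""
    return float(np.mean((y_pred - y_true) ** 2))

def mse_grad(y_pred, y_true):
    """Gradient of mse_loss with respect to y_pred (the starting point of backprop)."""
    return 2.0 * (y_pred - y_true) / y_pred.size

y_pred = np.array([0.8, 0.2])
y_true = np.array([1.0, 0.0])
print(mse_loss(y_pred, y_true))  # approximately 0.04
print(mse_grad(y_pred, y_true))  # approximately [-0.2  0.2]
```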

Learn From Scratch Backpropagation Neural Networks using Python GUI & MariaDB by Hamzan Wadi

Backpropagation from Scratch: How Neural Networks Really Work, by Florin Andrei (Towards Data Science, Jul 15, 2021)

How do neural networks really work? I will show you a complete example, written from scratch in Python, with all the math you need to completely understand the process.

How to build your own Neural Network from scratch in Python

Backpropagation is how the network works out how to update its weights. In straightforward terms, when we backpropagate we apply the chain rule, which includes taking the derivative of our activation function, to find how much each weight contributed to the error.
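The activation derivative is one link in that chain. For a single sigmoid neuron with a squared-error loss (an illustrative setup, not taken from the source), the gradient with respect to the weight is a product of three derivatives. The hypothetical `neuron_gradients` below spells them out and compares the result with a finite-difference estimate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # d/dz sigmoid(z) = sigmoid(z) * (1 - sigmoid(z))

def neuron_gradients(w, b, x, y):
    """Gradients of (sigmoid(w*x + b) - y)**2 with respect to w and b."""
    z = w * x + b
    y_hat = sigmoid(z)
    dloss_dyhat = 2.0 * (y_hat - y)   # derivative of the squared error
    dyhat_dz = sigmoid_derivative(z)  # derivative of the activation
    # Chain rule: dz/dw = x and dz/db = 1
    return dloss_dyhat * dyhat_dz * x, dloss_dyhat * dyhat_dz

def loss_at(w, b, x=2.0, y=1.0):
    return (sigmoid(w * x + b) - y) ** 2

dw, db = neuron_gradients(w=0.5, b=-0.1, x=2.0, y=1.0)

# Central finite differences as an independent check
eps = 1e-6
dw_numeric = (loss_at(0.5 + eps, -0.1) - loss_at(0.5 - eps, -0.1)) / (2 * eps)
db_numeric = (loss_at(0.5, -0.1 + eps) - loss_at(0.5, -0.1 - eps)) / (2 * eps)
print(dw, dw_numeric)
```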

Backpropagation from Scratch in Python

The back-propagation algorithm is iterative and you must supply a maximum number of iterations (50 in the demo) and a learning rate (0.050) that controls how much each weight and bias value changes in each iteration. Small learning rate values lead to slow but steady training.
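The effect of the learning rate shows up even without a network. This toy sketch (an assumption, not the demo's code) runs plain gradient descent for 50 iterations on the one-parameter loss `(w - 3) ** 2`, using the quoted rate of 0.050, a smaller rate, and a deliberately too-large rate.

```python
def gradient_descent(learning_rate, max_iterations=50, w=0.0, target=3.0):
    """Minimize the toy loss (w - target)**2, recording the loss after each iteration."""
    losses = []
    for _ in range(max_iterations):
        grad = 2.0 * (w - target)  # derivative of (w - target)**2
        w -= learning_rate * grad  # step size is controlled by the learning rate
        losses.append((w - target) ** 2)
    return w, losses

w_demo, losses_demo = gradient_descent(0.050)    # rate quoted in the text
w_small, losses_small = gradient_descent(0.005)  # smaller rate: slower progress
w_large, losses_large = gradient_descent(1.05)   # too large: overshoots and diverges
print(w_demo, w_small, w_large)
```

With 0.050 the distance to the minimum shrinks by a factor of 0.9 per step; with 0.005 only by 0.99, so after 50 iterations it is still far away; at 1.05 every step overshoots and the loss grows.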

How to Implement the Backpropagation Algorithm From Scratch In Python LaptrinhX

Welcome to a short tutorial on how to code the backpropagation algorithm from scratch. Using the backpropagation algorithm, artificial neural networks are trained…

GitHub

Aug 9, 2022. This article focuses on the implementation of back-propagation in Python. We have already discussed the mathematical underpinnings of back-propagation in the previous article linked below. By the end of this post, you will understand how to build neural networks from scratch.

How Does Back-Propagation Work in Neural Networks?

Backpropagation From Scratch With Python Pyimagesearch www.vrogue.co

How to Code a Neural Network with Backpropagation In Python (from scratch)

Difference between numpy dot() and Python 3.5+ matrix multiplication

CHAPTER 2: How the backpropagation algorithm works
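On the numpy `dot()` versus Python 3.5+ matrix multiplication question: the `@` operator added in Python 3.5 calls `np.matmul` for NumPy arrays. The short check below (with arbitrary example shapes) shows that the two agree for 2-D arrays but not for higher-dimensional ones.

```python
import numpy as np

A = np.arange(6).reshape(2, 3)
B = np.arange(12).reshape(3, 4)
# 2-D arrays: np.dot, np.matmul and the @ operator all give the matrix product
same_2d = np.array_equal(np.dot(A, B), A @ B) and np.array_equal(A @ B, np.matmul(A, B))

# N-D arrays: @ treats the leading axes as a batch ("stack") of matrices...
a = np.ones((2, 3, 4))
b = np.ones((2, 4, 5))
matmul_shape = (a @ b).shape    # (2, 3, 5)
# ...while np.dot sums over the last axis of a and the second-to-last axis of b
dot_shape = np.dot(a, b).shape  # (2, 3, 2, 5)
print(same_2d, matmul_shape, dot_shape)
```

For the 2-D weight matrices of a simple fully connected network the choice does not matter; it starts to matter once batches are stacked along extra axes.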

GitHub Neural Network Backpropagation

A Python notebook that implements backpropagation from scratch and achieves 85% accuracy on MNIST with no regularization or data preprocessing. The neural network has two hidden layers and uses sigmoid activations on all layers except the last, which applies a softmax activation.
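The snippet does not say which loss the notebook uses. Assuming the usual pairing of softmax with cross-entropy, the gradient with respect to the output layer's pre-activations simplifies to `softmax(z) - one_hot(y)`, averaged over the batch. A sketch with hypothetical function names:

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(probs, labels):
    """Mean negative log-probability of the correct class (labels are class indices)."""
    n = probs.shape[0]
    return float(-np.mean(np.log(probs[np.arange(n), labels])))

def softmax_cross_entropy_grad(logits, labels):
    """Gradient of cross_entropy(softmax(logits), labels) with respect to the logits."""
    n = logits.shape[0]
    grad = softmax(logits)              # a new array, so logits are not modified
    grad[np.arange(n), labels] -= 1.0   # softmax(z) - one_hot(y)
    return grad / n                     # divide by n because the loss is a mean

logits = np.array([[2.0, 1.0, 0.1], [0.5, 2.5, 0.3]])
labels = np.array([0, 1])
print(cross_entropy(softmax(logits), labels))
print(softmax_cross_entropy_grad(logits, labels))
```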

How to Code a Neural Network with Backpropagation In Python (from scratch)

The backpropagation algorithm works in the following steps:

- Initialize Network: the back-propagation network (BPN) randomly initializes the weights.
- Forward Propagate: after initialization, we propagate the inputs in the forward direction. In this phase, we compute the output and calculate the error with respect to the target output.
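The two steps listed above can be sketched in plain Python with no libraries. The function names, the bias-as-last-weight convention, the sigmoid activation, and the `target - output` error are assumptions for illustration.

```python
import math
import random

def initialize_network(n_inputs, n_hidden, n_outputs, seed=1):
    """Random weights in [0, 1); the last weight of each neuron acts as its bias."""
    rng = random.Random(seed)
    hidden = [[rng.random() for _ in range(n_inputs + 1)] for _ in range(n_hidden)]
    output = [[rng.random() for _ in range(n_hidden + 1)] for _ in range(n_outputs)]
    return [hidden, output]

def activate(weights, inputs):
    # Weighted sum of the inputs plus the bias term
    return weights[-1] + sum(w * x for w, x in zip(weights[:-1], inputs))

def forward_propagate(network, row):
    inputs = row
    for layer in network:
        # Each neuron's sigmoid output becomes an input to the next layer
        inputs = [1.0 / (1.0 + math.exp(-activate(neuron, inputs))) for neuron in layer]
    return inputs

def output_error(outputs, expected):
    """Error of each output unit relative to its target (target - output)."""
    return [e - o for o, e in zip(outputs, expected)]

network = initialize_network(n_inputs=2, n_hidden=3, n_outputs=2)
outputs = forward_propagate(network, [1.0, 0.5])
errors = output_error(outputs, expected=[0.0, 1.0])
print(outputs, errors)
```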

Deep Learning with Python Introduction to Backpropagation YouTube

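Backpropagation is considered one of the core algorithms in machine learning. It is mainly used for training neural networks. Strictly speaking, backpropagation computes the gradients of the loss with respect to the weights, and gradient descent then uses those gradients to update the weights; together, the two are often loosely called "backpropagation." What if we tell you that understanding and implementing it is not that hard?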
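A gradient check makes that distinction concrete: the backpropagated (analytic) gradient should match a finite-difference estimate, and a gradient descent step then uses it. The sketch assumes a single sigmoid unit with an MSE loss and random toy data; `loss_and_grad` and `numerical_grad` are hypothetical helpers.

```python
import numpy as np

def loss_and_grad(w, X, y):
    """MSE of a single sigmoid unit, plus its gradient via the chain rule (backprop)."""
    p = 1.0 / (1.0 + np.exp(-(X @ w)))
    loss = float(np.mean((p - y) ** 2))
    dz = 2.0 * (p - y) / len(y) * p * (1.0 - p)  # dL/dz for each example
    return loss, X.T @ dz

def numerical_grad(w, X, y, eps=1e-6):
    """Central finite differences, one weight at a time."""
    g = np.zeros_like(w)
    for i in range(len(w)):
        w_plus, w_minus = w.copy(), w.copy()
        w_plus[i] += eps
        w_minus[i] -= eps
        g[i] = (loss_and_grad(w_plus, X, y)[0] - loss_and_grad(w_minus, X, y)[0]) / (2 * eps)
    return g

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))
y = rng.integers(0, 2, size=5).astype(float)
w = rng.normal(size=3)

loss, analytic = loss_and_grad(w, X, y)  # backpropagation: compute the gradient
numeric = numerical_grad(w, X, y)
w_next = w - 0.1 * analytic              # gradient descent: use the gradient
print(np.max(np.abs(analytic - numeric)))
```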

Implementing Backpropagation in Python: Building a Neural Network from Scratch, by Andres Berejnoi

The backpropagation algorithm is a supervised learning algorithm for artificial neural networks in which we fine-tune the weights to improve the accuracy of the model. It uses gradient descent to reduce the cost function; when the cost function is the mean squared error, this means reducing the mean squared difference between the predicted and the actual values.
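Written out for a mean-squared-error cost over $n$ examples, with targets $y_i$, predictions $\hat{y}_i$, a weight $w$, and learning rate $\eta$ (our notation, not the source's), the cost, its gradient, and the gradient descent update are shown below. Backpropagation is the chain-rule procedure that supplies $\partial \hat{y}_i / \partial w$ for every weight in the network.

$$
J = \frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2,
\qquad
\frac{\partial J}{\partial w} = -\frac{2}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)\frac{\partial \hat{y}_i}{\partial w},
\qquad
w \leftarrow w - \eta\,\frac{\partial J}{\partial w}
$$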

Backpropagation from scratch with Python PyImageSearch

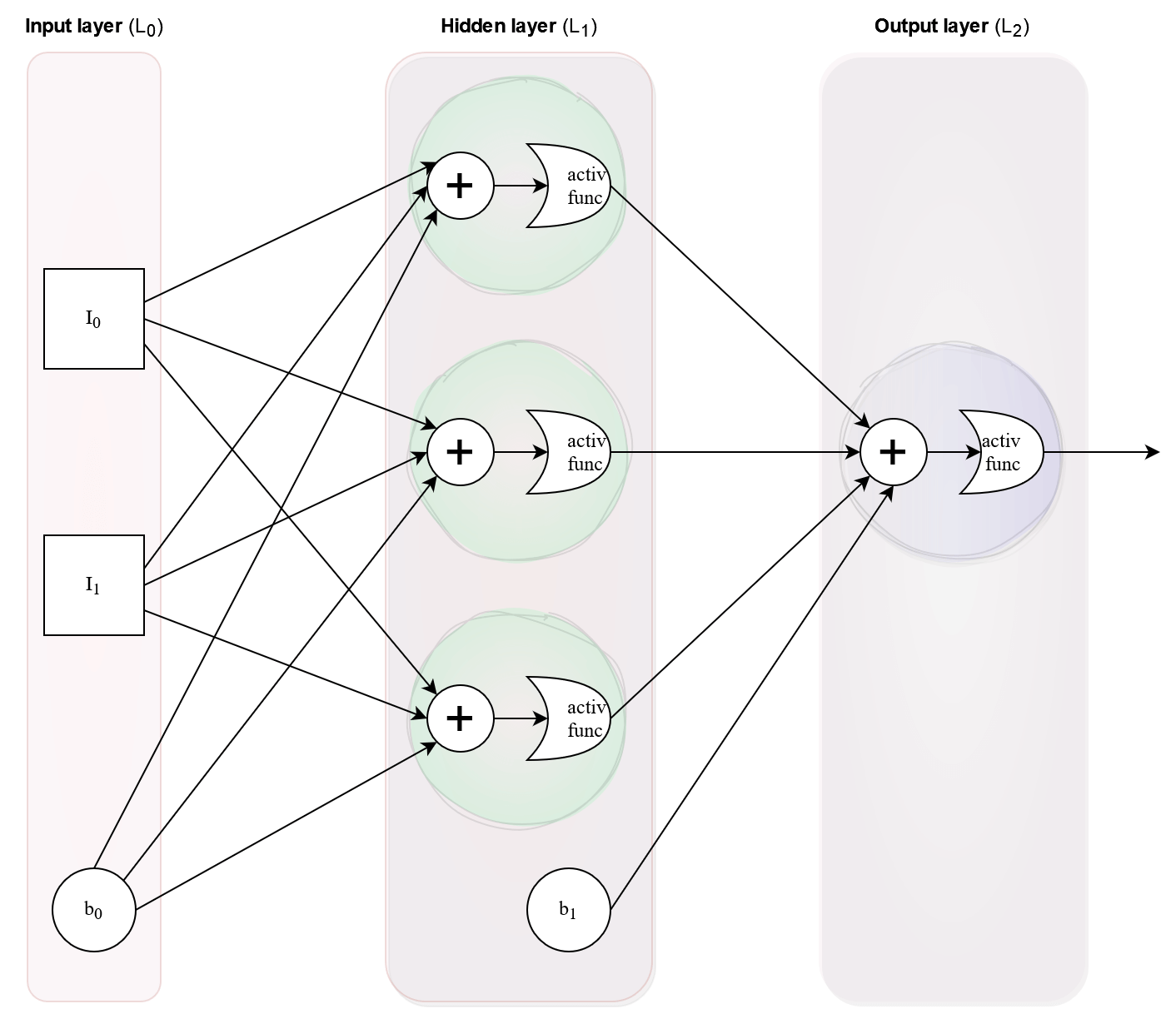

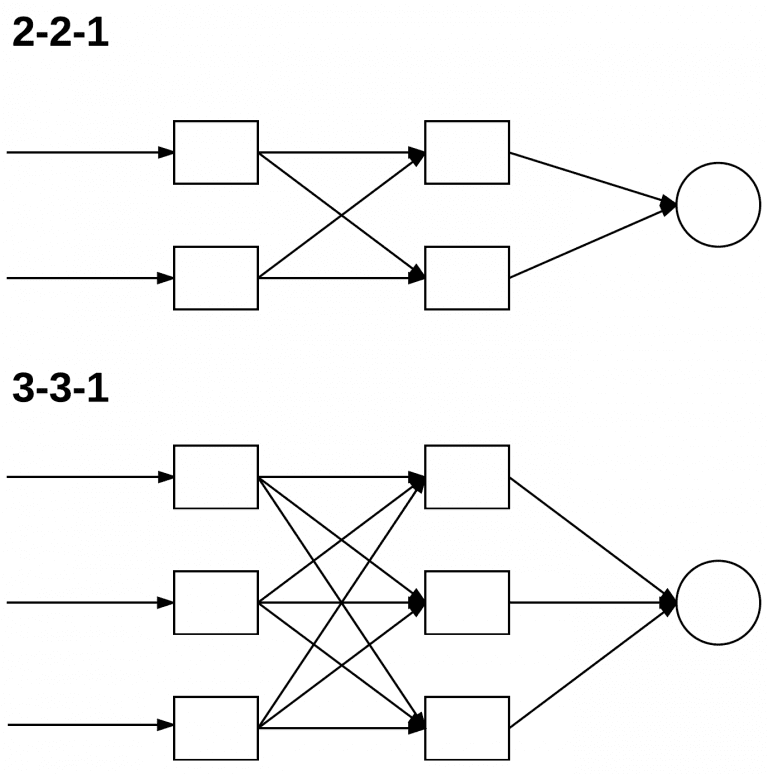

Technically, the backpropagation algorithm is a method for training the weights in a multilayer feed-forward neural network. As such, it requires a network structure to be defined of one or more layers where one layer is fully connected to the next layer. A standard network structure is one input layer, one hidden layer, and one output layer.
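A small sketch of that standard structure, assuming NumPy and an input-hidden-output network with arbitrary example sizes 2, 3, and 1. Here "fully connected" means each pair of adjacent layers is joined by a full weight matrix of shape (inputs, outputs) plus one bias per receiving unit. The helper names are hypothetical.

```python
import numpy as np

def build_fully_connected(layer_sizes, seed=0):
    """One weight matrix and bias vector for each pair of adjacent layers."""
    rng = np.random.default_rng(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        # Every unit in one layer feeds every unit in the next layer
        layers.append({"W": rng.normal(size=(n_in, n_out)), "b": np.zeros(n_out)})
    return layers

def count_parameters(layers):
    return sum(layer["W"].size + layer["b"].size for layer in layers)

net = build_fully_connected([2, 3, 1])    # input, hidden, output
print([layer["W"].shape for layer in net])  # [(2, 3), (3, 1)]
print(count_parameters(net))                # 13
```

The count follows directly from the structure: (2 × 3 + 3) for the hidden layer plus (3 × 1 + 1) for the output layer.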